In recent years, I have been writing a book on the history of water fluoridation, a practice that is dental dogma in a handful of countries, particularly in the English-speaking world. Unsurprisingly, historical inquiry reveals that the story is more complex than the triumphalist public-health narrative that justifies the practice. Like all historians, I have had to determine the temporal boundaries of the study and have chosen to concentrate on the twentieth century. In this framing, the focus is on three intertwining stories: the rampant tooth decay that afflicted a large proportion of the population of wealthy nations by the Second World War; the dramatic increase in fluoride pollution from industries such as aluminum- and phosphate-fertilizer production; and dentistry’s urgent search for a signature initiative to raise its standing within the medical and scientific communities.

Every now and then, however, I view the subject through a wider aperture. When did tooth decay become a significant problem, and why? How did humans become so vulnerable to dental deterioration, and why have we gravitated toward foods that are most likely to cause it? Learning about the long history of dental maladies involves detours into physical anthropology, while understanding their etiology requires a dive into microbiology. The overall impression is that the mouth is its own ecosystem, or microbiome, subject to the same kind of perturbations that ecologists find in ponds or forests.1

Unavoidably, as with the pond or the forest, there is a degree of arbitrariness in abstracting the oral ecosystem from the broader environment in which it is situated. The pond is part of a complex interconnected system that includes the farm and the feedlot. Similarly, the mouth, as well as being connected to the rest of the body, is subject to inputs and outputs that are frequently determined by economic circumstances and cultural preferences. Nonetheless, the ecosystem view offers a useful framing, one in which teeth are subject to long-term biocultural forces. In the process, it elucidates the historical contingency and constructedness of various dental ideas, conditions, and practices people take for granted.2

The average mouth contains at any given time somewhere between seven hundred and one thousand species of microbes. Their swampy home is bathed in saliva and continuously heated to around 36°C, the odd gulp of scalding coffee or ice-cold lager notwithstanding. Saliva’s manifold properties include helping to remineralize enamel and regulating the fluctuating microbial population that dwells in our oral cavity. Somewhat counterintuitively, the most salubrious microbial home in this ecosystem is the teeth. Whereas the mouth’s mucosal surfaces are constantly peeling and flaking, thereby reducing their bacterial load, teeth do not desquamate. Hence, they provide a relatively stable surface to which a surprising variety of bacteria can adhere. Just as a mountain range hosts different species in its various biomes and at varying altitudes, dental bacteria specialize in colonizing particular surfaces of the teeth: some enjoy living in close proximity to the gumline, while others prefer the network of nooks and crannies on top of the molars. In conjunction with food and saliva, these microbes form a biofilm, known as dental plaque, that coats the teeth.3

In the good old days before the Neolithic, the microbes that inhabited plaque formed a happily balanced ecosystem, feeding on the microscopic residue of proteins, fats, and carbohydrates that evaded swallowing. While the population of different bacteria no doubt waxed and waned according to seasonal dietary variation, none ever came to dominate the rest. When one threatened to do so, like France in nineteenth-century Europe, the others quickly convened a Congress of Vienna to put it back in its place. About ten thousand years ago, however, some members of our species rather abruptly began to increase the proportion of carbohydrates in their diet. Instead of supplementing a predominantly animal-based menu (everything from mammoths to mollusks) with some tubers and berries, these impoverished diners began to subsist largely on a few species of grass seeds. Over time, they continued to refine these foods, making them more palatable. For the carbohydrate-loving bacteria inhabiting teeth, every day was now Thanksgiving.

In the oral ecosystem, fermentable carbohydrates are like fertilizer runoff in a pond. In the pond, the sudden infusion of phosphorus causes algal blooms, which then decompose and deplete the water of oxygen, a process called eutrophication. In the mouth, plaque bacteria ferment these carbohydrates into acids, such as lactic acid, thereby increasing the acidity of the plaque biofilm. This favors the growth of a few acid-tolerant species, such as those of the genera Streptococcus and Lactobacillus. As these come to dominate their environment, they acidify the plaque biofilm still further, which then demineralizes enamel and dentin, allowing bacteria to enter the pulp and infect the tooth. The term that describes both the process of demineralization and the lesions that frequently result is dental caries. Adjectives that describe the pain of advanced tooth infection include excruciating, agonizing, and torturous.4

Judging by evidence from skeletal remains, dental caries, although not entirely absent, was uncommon prior to the emergence of agrarian societies. When it is found in Paleolithic sites, it is generally thought to indicate that the population was beginning to make the dietary shifts characteristic of agriculturalists.5 From the Neolithic onward, the majority of skulls tend to contain teeth with at least one carious lesion. A recent study of an early farming community in Germany, for example, found that 68 percent of adults displayed the telltale signs of caries. This fits with the broader anthropological pattern in which human teeth exhibit higher rates of caries over time.6

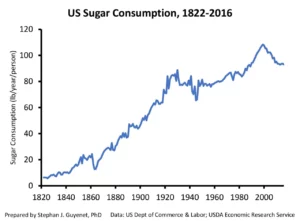

The kind of food that could feed lots of people, it turned out, upset the balance of our oral ecosystem. Regular and widespread toothache was part of the tradeoff in our species’ long-term, cereal-fueled population growth. Complex carbohydrates like wheat and rice, however, were relatively benign compared to the caries bomb that exploded in mouths across much of the world with the onset of European imperialism and the Industrial Revolution. If bread was like coal for cariogenic bacteria, refined sugar was rocket fuel. The craving for sweetness evolved in an environment of sugar scarcity, encouraging the consumption of energy-rich foods on the rare occasions they were available; it could not be turned off with the flick of a switch. Exploration, expropriation, and slavery made sugar increasingly abundant and affordable. Unsurprisingly, consumption soared, as did toothache, extraction, and widespread dental malaise of the kind the species had never before experienced.7

According to Daniel Lieberman, an evolutionary biologist at Harvard who has examined thousands of ancient skulls from all over the world, “[m]ost skulls from the last few hundred years are a dentist’s nightmare,” with cavities and abscesses increasingly numerous as one approaches the modern era.8 There is nothing to suggest this change is genetically driven. Rather, the history of dental caries involves an interplay of culture and biology in which changing dietary patterns altered our microbial makeup. Essentially, we exchanged healthy teeth for cheap and easily metabolized energy.9

While sugar is not the only cause of the modern caries epidemic, it is clearly the major one. Throughout the nineteenth century, the British were the world’s greatest sugar consumers and consequently endured the most dental distress. In the twentieth century, the United States took the lead; by 1926, US citizens and nationals were swallowing 50 kilograms of refined sugar per capita annually, a number that has held relatively steady ever since.10 In the mid-1930s, a survey by the United States Public Health Service determined that 71 percent of 12- to 14-year-olds had one or more carious teeth. This was alarming for a number of reasons. Caries was not merely a problem of childhood: it had multiple ramifications for both oral and overall health, including secondary infections in the heart and brain. Among the most important consequences from the perspective of the federal government was that an unacceptably high number of young men were failing military-recruitment physicals because they lacked enough sound teeth to adequately chew their rations.11

For early twentieth-century dentists and health officials, the solution to the caries epidemic seemed clear: stop eating so much refined sugar and starch. Then as now, the idea of achieving this seemed so remote as to be laughable. An alternative was to look to minerals and micronutrients that could fortify enamel or create an oral environment less conducive to cariogenic bacteria. After many years of research, a group of dentists and industrial chemists came to the conclusion that fluoride could play a significant role in caries control.12

In its pure form, elemental fluorine is a highly toxic pale yellow gas that reacts violently with almost all organic material. It cuts through steel and glass and burns through asbestos. It is the most electronegative and most reactive element in the periodic table, ravenously seizing electrons from almost any element it encounters. Fortunately, fluorine rarely exists in its pure form: nature keeps it in check by binding it with other elements, forming various compounds known as fluorides.13 In the global biogeochemical cycle, the vast majority of fluoride on the earth’s surface is spewed out by volcanoes, predominantly as hydrogen-fluoride gas. It bonds with other elements to form compounds such as calcium fluoride, sodium fluoride, and many others that are deposited in soil, rocks, and the ocean, and unevenly distributed around the planet. Since the eighteenth century, coal burning and various industrial processes such as phosphate-fertilizer production have added significant quantities of fluoride to the biogeochemical cycle. Over the same period, chemists have created numerous new fluoride compounds that are both useful to industry and dangerous pollutants.14

One of the main reasons that fluoride was an attractive solution for caries was that it would not entail reforming the entrepreneurial, fee-for-service culture that dominated US dentistry. In fact, once freed from the mundane task of filling cavities, dentists could focus on more lucrative periodontic and cosmetic procedures. Furthermore, dentistry would finally have a signature public-health initiative that would elevate the field’s overall status within the medical world. Best of all, fluoride—an abundant, highly toxic by-product of the aluminum and phosphate industries—would offer a simple, cheap remedy (albeit only a partial one) to a complex public-health problem. It was a palliative that threatened no substantial economic interests and required no significant changes in dietary practices or food production. In short, it was the classic attempt at solving a complex problem with a cheap technofix.15

In the early years of water fluoridation, in the 1940s and 1950s, its proponents pushed the practice with a fervor that mirrored the zealousness of their antifluoridation opponents.16 They insisted, for example, that the practice would reduce dental caries by 60 percent. The figure proved highly optimistic and has been revised steadily downward; health authorities now estimate a more modest reduction of 25 percent. What does this figure represent in terms of actual teeth? Each tooth has several surfaces that are subject to decay, for a total of approximately 100 surfaces in the average human mouth. As near as we can tell (there is no hard data), the average person develops caries on about four of these surfaces per lifetime. A 25-percent reduction means that after a lifetime of drinking fluoridated water, people will experience caries on one fewer tooth surface than would otherwise have been the case. It is not nothing, but it is far less impressive than the phrase “a 25-percent reduction” implies. Dental authorities, it seems, are not above exploiting the arithmetical vagaries of expressing the difference between two small numbers as a percentage in order to bolster fluoridation’s reputation.17
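Written out with the essay’s own rough figures (about 100 decay-prone surfaces per mouth and about four carious surfaces per lifetime, both approximations rather than measured values), the gap between the relative and the absolute reduction looks like this:

$$
\underbrace{0.25}_{\text{relative reduction}} \times 4\ \text{surfaces} = 1\ \text{surface},
\qquad
\frac{4}{100} = 4\% \;\longrightarrow\; \frac{3}{100} = 3\%
$$

In relative terms, going from four affected surfaces to three is a 25-percent reduction; in absolute terms, it is a change of about one percentage point in the share of tooth surfaces affected over a lifetime.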

Insofar as fluoride does reduce dental caries, it works by being incorporated into enamel and making teeth more resistant to the acids generated by sugar-fueled microbes. It may also act as an antimicrobial agent, reducing the acid tolerance of bacteria such as Streptococcus. From an ecosystem perspective, the practice is analogous to the monocrop agriculture that is emblematic of modern food systems: artificial fertilizer promotes growth and pesticides kill insects that threaten the crops. In the oral environment, fluoride performs both functions (albeit with less efficacy), aiding in the remineralization of enamel and helping destroy the dominant bacteria, themselves the product of monoculture-based diets, that threaten to damage it.

Just as agricultural chemicals pollute the environment with toxins, fluoride—even at the putatively safe levels recommended by dental-health authorities—causes an array of problems in the corporeal ecosystem. For example, early fluoride researchers were concerned about potential bone damage and cataracts that appeared to occur at higher rates in some communities with elevated levels of fluoride in their drinking water. The most visible harm is a dental condition that played a vital role in the discovery of fluoride in some water supplies. In the early twentieth century, dentists were flummoxed by the fact that people in certain communities in Colorado and Texas suffered from badly stained enamel. This, it turned out, was dental fluorosis, a sign of poisoning due to high levels of naturally occurring fluoride in drinking water.

The same communities, however, had relatively low rates of dental caries. After several surveys, dental researchers determined that at one part per million (ppm), fluoridated drinking water appeared to reduce caries rates without significantly staining teeth. But the margin was fine: at 1.5 ppm and above, dental fluorosis began to appear. The difference was the equivalent of a few extra glasses of water per day and did not take into account fluoride from other sources, such as industrial pollution and various foods and beverages. Unsurprisingly, the onset of artificial water fluoridation saw a sharp uptick in rates of dental fluorosis.
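A rough illustration of that margin, assuming (hypothetically, since the essay gives no intake figure) a baseline of about 2 liters of drinking water per day, and using the fact that 1 ppm of fluoride in water corresponds to 1 milligram per liter:

$$
1\ \tfrac{\text{mg}}{\text{L}} \times 3\ \text{L} \;=\; 1.5\ \tfrac{\text{mg}}{\text{L}} \times 2\ \text{L} \;=\; 3\ \text{mg of fluoride per day}
$$

On that assumption, someone drinking one extra liter a day (about four 250-milliliter glasses) of water at 1 ppm would take in the same daily dose as someone drinking the baseline 2 liters at 1.5 ppm, before any fluoride from food, beverages, or industrial sources is counted.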

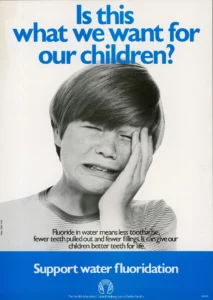

Given how desperate dentists and health authorities were to tackle the caries epidemic, one can understand why they were drawn to a solution that in retrospect—and for many at the time—seems problematic. By dosing the water supply they were attempting to thread the needle between fluoride’s potential benefits and its demonstrable harm. However, before they could begin, fluoridation’s mid-twentieth-century proponents needed to convince a skeptical public that a substance it had hitherto thought of as the main ingredient in rat poison could be safely imbibed on a daily basis. They did this—very successfully, it must be said—by naturalizing fluoride. As Trendley Dean, the so-called father of fluoridation, put it: “fluoridation of public water supplies simulates a purely natural phenomenon—a prophylaxis which Nature has clearly outlined in those communities that are fortunate enough to have about one part per million of fluoride naturally present in the public water supply.”18

Robert Kehoe, a well-known toxicologist at the Kettering Laboratory in Cincinnati, which was funded by the automobile and chemical industries, also played an important role in downplaying fluoride’s toxicity. He did so by employing the same playbook he had used to justify the continued use of lead in gasoline and paint: both lead and fluoride occurred naturally and were part of the constant chemical background of life on Earth. Thus, humans were used to them, and putting a little more into the environment would do no significant harm.19

Naturalizing fluoride brought peace of mind to those who might otherwise have been skeptical of water fluoridation. Dr. Benjamin Spock, the celebrity pediatrician who authored the bestseller The Common Sense Book of Baby and Child Care and who became a prominent spokesman for fluoridation, is a good example. Spock conceded that his support ran counter to his general suspicion of chemical additives: “I’ve always been against the pollution of the diet by the addition of salt and sugar, additives and preservatives to foods consumed by adults as well as children . . . And I’ve always been against the imposition of regulations on people in an arbitrary, undemocratic manner.” Spock also admitted that he had been a fluoridation skeptic in the 1940s, but by the late 1950s, he had become chairman of a national committee to educate the public about the value and safety of fluoridation. “What particularly allayed my early doubts about adding a chemical to the public water supplies,” he later told readers, “was learning that fluoride has always occurred naturally in water supplies, in various concentrations ranging from seven parts per million in some regions of the Southwest to a mere twentieth of a part per million in some regions of the Northeast. It is a natural, though varying, ingredient in water.”20

In retrospect, Spock’s fluoride flip looks astonishingly gullible. As Kehoe would have happily told him, lead occurs naturally in soil, arsenic occurs naturally in water, and sulfur dioxide occurs naturally in air. Nevertheless, you would not want to be forced to swallow or inhale any of them. And where fluoride is naturally present in water, it is almost never at what dentists consider the optimum level. It is either too little to stave off streptococci or so much that it causes dental fluorosis, or in severe cases, debilitating skeletal fluorosis.21 Spock’s credulity is a testament to the rhetorical power of what Lorraine Daston and Fernando Vidal refer to as “the moral authority of nature”: a “trick that consists in smuggling certain items . . . back and forth across the boundary that separates the natural and the social,” thereby imparting “universality, firmness, even necessity—in short, authority—to the social.”22

Spock and other proponents also seemed oblivious to, and strangely uncurious about, the source of all the fluoride that was needed to fluoridate thousands of water systems throughout the United States and beyond. Far from the pharmaceutical-grade fluoride that was later added to toothpaste, the substance drip-fed into municipal water supplies is a raw and highly toxic by-product of the phosphate-fertilizer industry.23 America has always preferred its public-health policies on the cheap, and on that score fluorosilicic acid waste certainly delivers. Unfortunately, studies show that fluoride derived from fertilizer production contains metal contaminants, such as arsenic and cadmium, that vary by batch. Yet nobody tests for these contaminants before the chemicals are disseminated into drinking water.24

Environmentalists are usually the first to sound the alarm when toxic substances are introduced into the water. Yet when it comes to fluoridation, many turn a blind eye, asserting the trustworthiness of institutions that in not dissimilar circumstances they frequently sue.25 What explains this reticence? It is mostly a matter of timing. As environmentalism started to gain political traction in the 1960s and 1970s, fluoridation already enjoyed enormous support among the scientific and policy elite in the Anglo-American world. Furthermore, opponents had been successfully (and in some cases, not undeservedly) stereotyped as Strangelovian kooks: irrational extremists who saw fluoridation as a nefarious communist plot. Expressing skepticism toward fluoridation thus became an increasingly hazardous act if one cared about one’s scientific credibility.26

Despite the fact that the world’s most influential nation supported the practice, water fluoridation did not readily gain traction in other parts of the world, particularly beyond the Anglosphere. Even today, many people in countries like the United States and Australia are surprised to learn that water fluoridation is practiced in only a handful of places.27 Most European nations prohibit it. There is nothing to suggest that these countries have had worse dental-health outcomes.28 To the contrary, World Health Organization data shows that countries like Sweden, the Netherlands, and France have decreased dental caries at the same rate as the United States but without the concomitant increase in dental fluorosis.29

Recent events suggest that the fluoridation consensus may be beginning to fray. Studies indicate that even at relatively low levels, fluoride is a neurotoxin. Children raised in fluoridated areas in Canada have IQ scores that are 4.5 points lower than their non-fluoridated counterparts.30 In the 1970s, research linking a 4-point reduction in IQ to lead poisoning was enough to finally convince US authorities to ban the practice of adding lead to gasoline and paint.31 It is possible that fluoride may be approaching a similar tipping point: in March 2023, after a lawsuit by fluoridation skeptics, the federal government was forced to release a draft report by the National Toxicology Program, a branch of the National Institutes of Health, which casts further suspicion on fluoride’s impact on the brain.32 While not yet conclusive, the body of evidence regarding fluoride’s neurotoxicity is becoming increasingly difficult to ignore.33

A small but significant IQ reduction constitutes exactly the kind of elusive effect that fluoridation skeptics warned about from the start. No one was expecting bodies in the street. Rather, opponents in the scientific and medical communities who understood fluoride’s biological effects worried that long-term exposure would catalyze an array of chronic conditions that would be hard to distinguish from other illnesses, like arthritis or kidney disease, and that would be difficult to directly attribute to water fluoridation. Such concerns, combined with the unimpressive evidence for water fluoridation’s efficacy, make the practice look increasingly like a relic of a bygone age, an era of incautious scientific optimism summed up by the mid-twentieth-century DuPont advertising slogan, “better living through chemistry.”

Teeth, particularly those that reside in fluoridating nations, are now firmly part of the agro-industrial system that has transformed much of the planet. Although it is not a pretty picture, there is a kind of satisfying circle-of-life aspect to it: industrial fertilizer grows industrial crops that produce industrial levels of dental caries, which get treated with the waste product of industrial fertilizer. Unsurprisingly, it is hard to write the environmental history of the mouth as anything but a declensionist narrative. In addition to record levels of caries, our modern diets and lifestyles have also given rise to impacted wisdom teeth, malocclusion, weak jaws, small mouths, and altered craniofacial structure.34

As with so many aspects of human health, the cure for our ills lies tantalizingly within reach—in this case through relatively simple dietary and lifestyle changes. And yet, for all the usual reasons—capitalism, inequality, politics, vested interests—the pessimistic historian in me feels that we may never quite grasp it. In which case, water fluoridation, like other suboptimal health schemes, will likely persist. The only foreseeable way that the dental-health community in the Anglosphere might reconsider the practice is if further neurological research continues to demonstrate a robust relationship between fluoride consumption and children’s IQs. The fluoridation narrative will be difficult to sustain if parents begin to ask their dentists and doctors: “Why should I risk my child’s neurological health just to slightly reduce her chances of getting a cavity?” If that were to occur, then countries like the United States might finally have to admit that tackling dental caries entails more than merely dosing drinking water with a chemical: it requires a sustained, multipronged approach that includes dietary change, reforms in agriculture and food policy, and universal access to dental care. Such actions would have health benefits that go well beyond dental caries. Politically, it will be a tough slog. If it is to happen, however, historicizing the triumphalist narrative of water fluoridation is an important first step.

By Frank Zelko (University of Hawaii)

Original full-text article online at: https://springs-rcc.org/in-the-teeth-of-history/